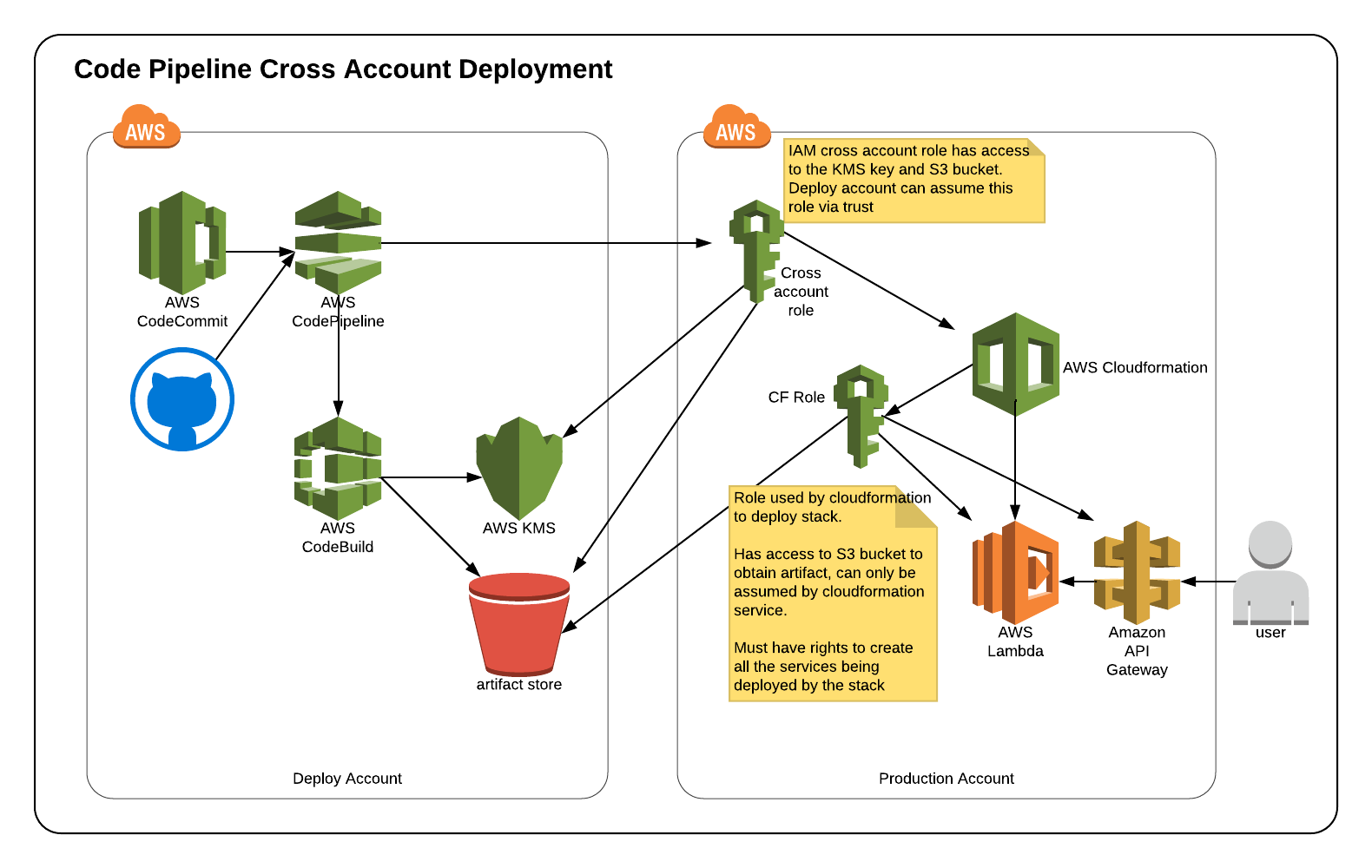

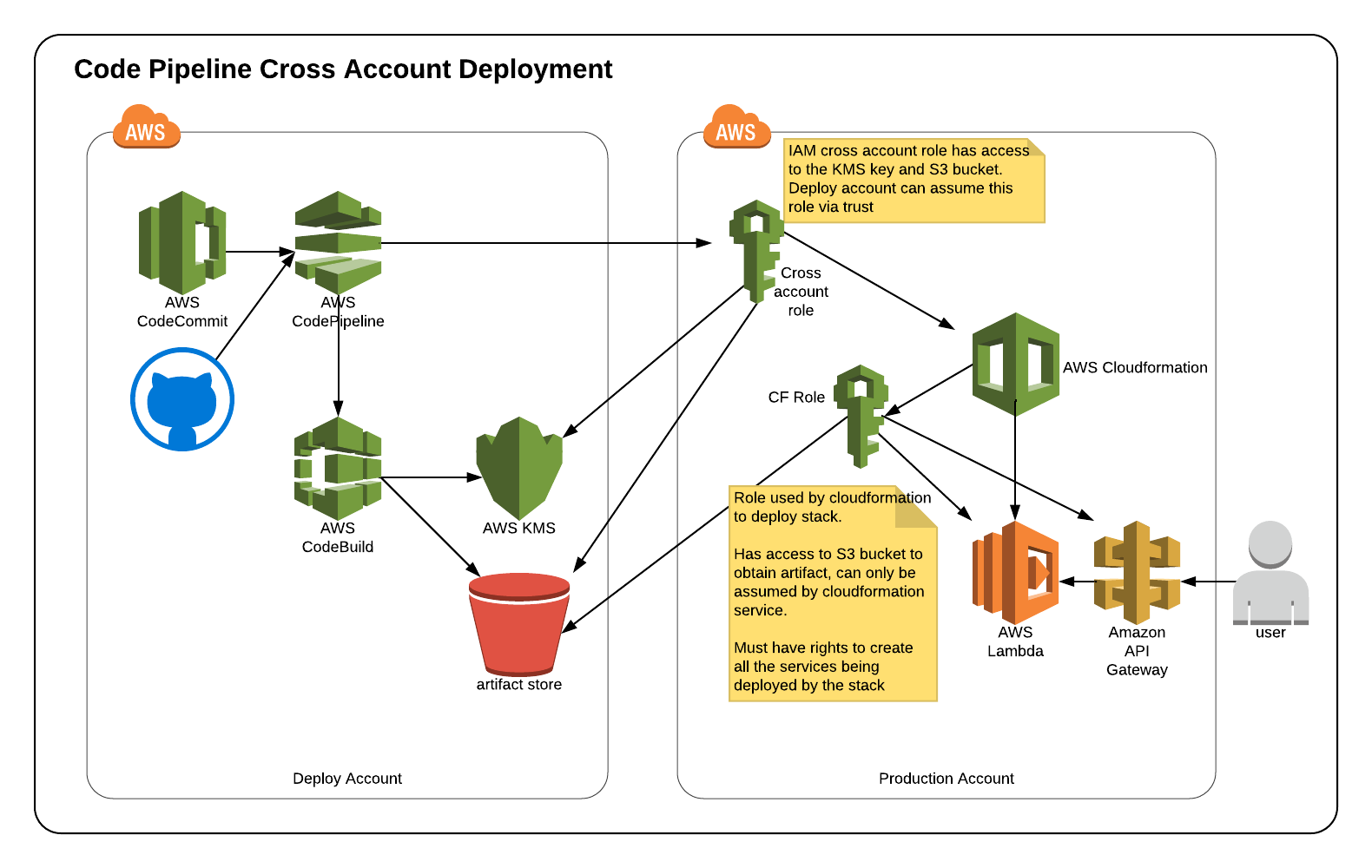

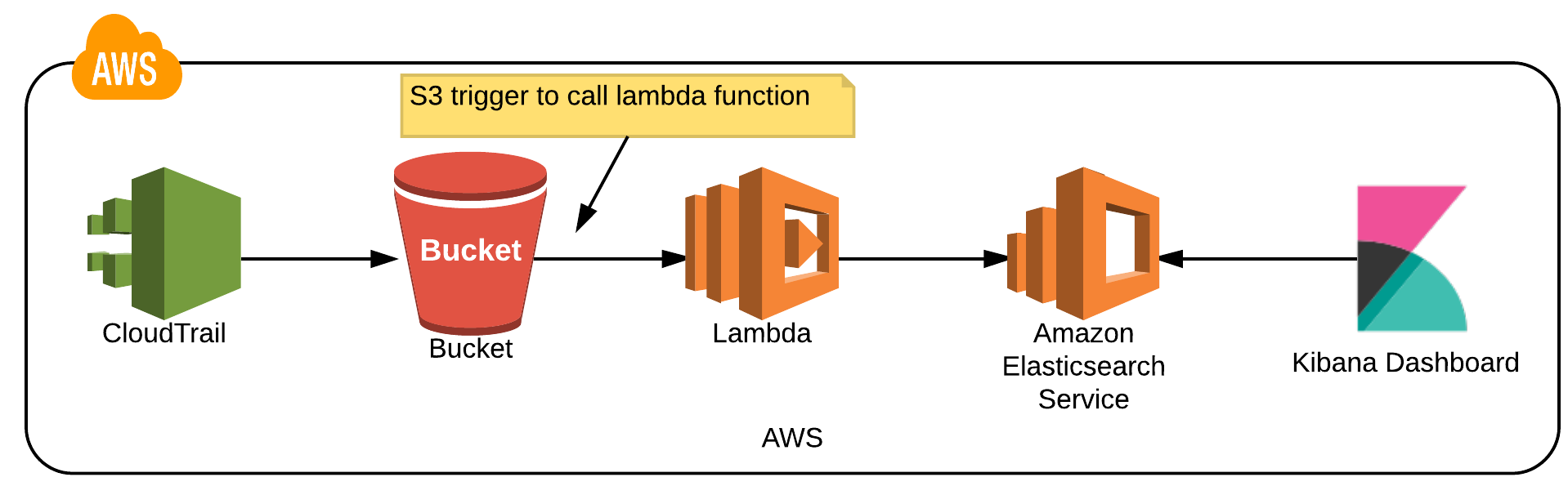

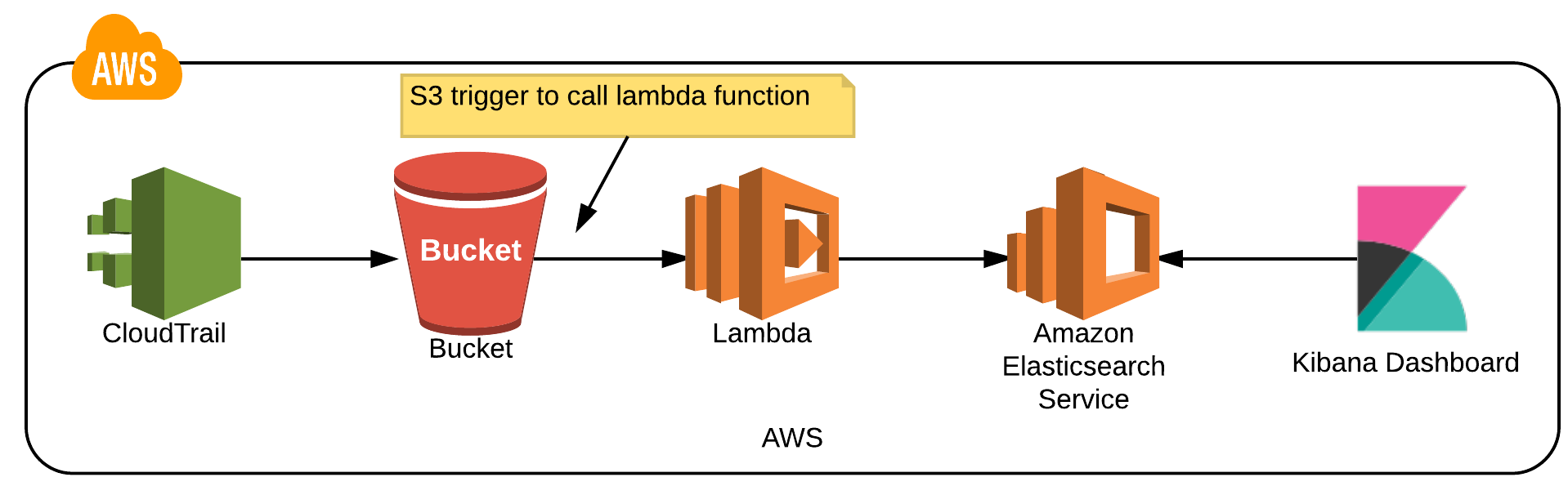

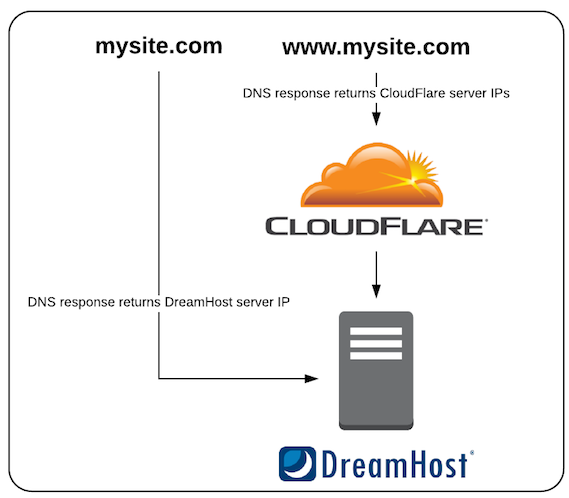

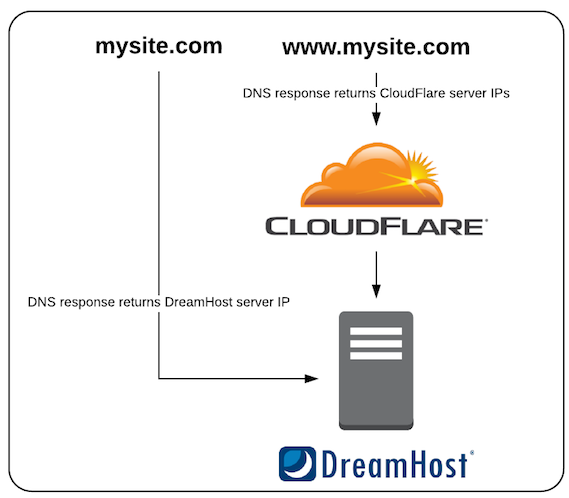

AWS Elastic Beanstalk deployment failed - invalid byte sequence in UTF-8 - node application

Posted at: 2018-02-27 @ 09:11:52

Recently one of our Elastic Beanstalk deployments suddenly failed out of the blue with the following error in the EB console:

Command failed on instance. Return code: 1 Output: invalid byte sequence in UTF-8. For more detail, check /var/log/eb-activity.log using console or EB CLI.

Looking in said eb-activity.log we have the following line:

/AppDeployStage0/AppDeployPreHook/50npm.sh] : Activity has unexpected exception, because: invalid byte sequence in UTF-8 (ArgumentError)

This is a Node application stack and we didn't write this script, so the next step was a P1 AWS support ticket. Thankfully we have a level of support that results in fast answers.

AWS confirmed it was an issue with the ebnode.py file that 50npm.sh calls.

Specifically:

"Elastic Beanstalk passes STDOUT and STDERR of npm install to Python and Ruby based logger scripts. These loggers expect NPM modules to conform to standards and use UTF-8 characters."

The cause

The root cause was that a dependency in our application pulled in an NPM module that emits some non-UTF-8 characters during npm install, and that causes EB to break when deploying a new version.
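To track down which part of the install output trips the logger, one option is to capture the output to a file and scan it for bytes that are not valid UTF-8. The following is a minimal sketch, not something AWS provided: the function names are hypothetical, and it assumes a Node version where `TextDecoder` is available as a global.

```typescript
// Hypothetical helper: true when the bytes decode cleanly as UTF-8.
function isValidUtf8(bytes: Uint8Array): boolean {
  try {
    // fatal: true makes decode() throw on malformed input instead of
    // silently substituting the replacement character.
    new TextDecoder("utf-8", { fatal: true }).decode(bytes);
    return true;
  } catch {
    return false;
  }
}

// Hypothetical helper: returns the 1-based line numbers whose bytes are not valid UTF-8.
// Splitting on 0x0a is safe because that byte never occurs inside a multi-byte UTF-8 sequence.
function findInvalidUtf8Lines(output: Uint8Array): number[] {
  const badLines: number[] = [];
  let lineStart = 0;
  let lineNumber = 1;
  for (let i = 0; i <= output.length; i++) {
    if (i === output.length || output[i] === 0x0a) {
      if (!isValidUtf8(output.subarray(lineStart, i))) {
        badLines.push(lineNumber);
      }
      lineStart = i + 1;
      lineNumber++;
    }
  }
  return badLines;
}
```

The input could be the result of `fs.readFileSync` on a log captured from a local `npm install` run, since a Node Buffer is also a Uint8Array.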

The AWS provided solution

The AWS provided workaround is to modify the ebnode.py script to redirect NPM install output to /dev/null.

Specifically, look for lines like:

check_call([npm_path, '--production', 'install'], cwd=app_path, env=npm_env)

and modify to:

with open(os.devnull, 'w') as devnull:
    check_call([npm_path, '--production', 'install'], cwd=app_path, env=npm_env, stdout=devnull)

The updated ebnode.py would then need to be uploaded to S3 (the elasticbeanstalk-$REGION-$ACCOUNTID bucket probably makes the most sense), with .ebextensions used to pull the new ebnode.py file and overwrite the default one.

If you're thinking messy and hard: yes and yes.

How I fixed it

I took our whole application and moved it to a Docker container. Then I deployed it to AWS Fargate, which is the new "run a container without worrying about hosts" service.

I've been informed that AWS is planning on fixing this. However, it sounds like it won't be fixed until they bump the Node EB environment again.

So if you're running a Node application in Elastic Beanstalk, be warned: this could happen to you. In our case, it wasn't even a dependency we updated! It was a dependency of a dependency.

OpenPGP.js how to encrypt files

Posted at: 2018-02-03 @ 07:51:39

Quick post today on using the openpgp.js library to create an encrypted file that is PGP/GPG compatible. This was another one of those things that took me longer to implement than I expected!

I write most of my JavaScript using TypeScript these days, so that's what I'm showing in my examples. If you want raw JS, see my GitHub or run tsc (the TypeScript compiler) over the example code.

First, let us look at the encryption.

I'm going to supply the key in a format called ASCII armour.

In this format a public key will start with -----BEGIN PGP PUBLIC KEY BLOCK-----

When using this format, pulling the key in requires using the readArmored method:

const publicKey: string = '-----BEGIN PGP PUBLIC KEY BLOCK-----.......';

openpgp.initWorker({}); // initialise openpgpjs

const openpgpPublicKey = openpgp.key.readArmored(publicKey);

OK, now we need to deal with a file. Unfortunately openpgp.js only supports files as a Uint8Array, but fs.readFile by default will return a Node Buffer. Thankfully converting one to the other isn't that hard!

const file = fs.readFileSync('/tmp/file-to-be-encryped');

const fileForOpenpgpjs = new Uint8Array(file);
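As a small aside, the cast above can be checked in isolation. This is only a sketch with a hypothetical helper name: it shows that `new Uint8Array(buffer)` yields a plain Uint8Array holding a copy of the bytes, so later changes to the original Buffer don't affect it.

```typescript
// Hypothetical helper wrapping the same cast used above.
function toPlainUint8Array(buf: Buffer): Uint8Array {
  // Constructing a typed array from another typed array copies the bytes.
  return new Uint8Array(buf);
}

const original = Buffer.from("hello");
const bytes = toPlainUint8Array(original);

// Mutating the Buffer afterwards leaves the copy untouched.
original[0] = 0;
```

The copy costs memory equal to the file size, which matters for large files.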

Next, we build the request object for openpgp.js. Note how we reference the .keys item attached to the openpgpPublicKey object returned by readArmored.

const options = {
  data: fileForOpenpgpjs,
  publicKeys: openpgpPublicKey.keys,
  armor: false
};

The armor: false setting tells openpgp.js not to ASCII armour the file output, so we will get a binary object, NOT a string. openpgp.js does support outputting a full file in ASCII armour, but I'm unsure if that would be a good idea for a file of any significant size.

Setup complete, now we perform the actual encryption!

const encryptionResponse = await openpgp.encrypt(options); // note the await here - this is async operation

This performs the encryption. Now we need to get the file object out of the response, which requires calling message.packets.write():

const encryptedFile = encryptionResponse.message.packets.write();

Right now we have a constant, encryptedFile, that is of type Uint8Array. Lucky for us, fs.writeFile supports Uint8Array as an input, so we simply write it back to disk!

fs.writeFileSync('/tmp/file-encryped', encryptedFile);

Phew, OK, encryption done.

Guess we'd better be able to decrypt. It starts much the same.

As private keys can have many sub keys, we have to pick one. Hence .keys[0] on readArmored.

There is another new thing, .decrypt. Private PGP keys are encrypted, so we have to decrypt ours using the private key password.

Lastly, we do the cast from Buffer to Uint8Array again on our file read.

const privateKey: string = '-----BEGIN PGP PRIVATE KEY BLOCK-----.......';

openpgp.initWorker({});

const openpgpPrivateKeyObject = openpgp.key.readArmored(privateKey).keys[0];

openpgpPrivateKeyObject.decrypt('PRIVATE KEY PASSWORD');

const file = fs.readFileSync('/tmp/file-encryped');

const fileForOpenpgpjs = new Uint8Array(file);

Next are our openpgp.js request options. They are a little different to encrypt, which took me ages to figure out!

const options = {
  privateKey: openpgpPrivateKeyObject,
  message: openpgp.message.read(fileForOpenpgpjs),
  format: 'binary'
};

Fairly obvious now you see it, right? The format: 'binary' setting is important, as it tells openpgp.js that we are dealing with a binary message, not an ASCII armoured one.

Now we call the actual decrypt. Again this is an async function, so I'm using await here. The raw binary file will be in the .data attribute, which contains the Uint8Array that is our file.

We can write this directly back to disk, as fs supports Uint8Array as an input.

const decryptionResponse = await openpgp.decrypt(options);

const decryptedFile = decryptionResponse.data;

fs.writeFileSync('/tmp/file-encryped-then-decrypted', decryptedFile);

Last but not least, it is probably a good idea to include the following at the start of your file. These settings prevent openpgp.js from printing version and comment information into any files.

openpgp.config.show_version = false;

openpgp.config.show_comment = false;

Once you have seen it and had it explained, it is not that hard. I ended up working this out by reading the openpgp.js unit test code... Not ideal really, so I hope this helps someone out there!

One word of warning. As we're reading files into buffers here and not handling them as streams (I don't think openpgp.js supports streams), there is a risk we could run the system out of memory on a file larger than available memory. I'd always check input file size against available memory if you can.
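One way to do that check is sketched below. The function names and the `copies` multiplier are assumptions of mine, not something openpgp.js prescribes: the process holds at least the Buffer, the Uint8Array copy and the encrypted output at the same time, so a factor of 3 is used as a rough guess.

```typescript
import * as fs from "fs";
import * as os from "os";

// Hypothetical helper: is there room for `copies` in-memory copies of the file?
function fitsInMemory(fileBytes: number, freeBytes: number, copies: number = 3): boolean {
  return fileBytes * copies <= freeBytes;
}

// Hypothetical guard to call before reading the file into a buffer.
function assertFileFitsInMemory(path: string): void {
  const fileBytes = fs.statSync(path).size; // size on disk in bytes
  const freeBytes = os.freemem();           // free system memory in bytes
  if (!fitsInMemory(fileBytes, freeBytes)) {
    throw new Error(`${path} is ${fileBytes} bytes, too large for ${freeBytes} bytes of free memory`);
  }
}
```

Note that os.freemem() reports free system memory, which is not the same as the Node heap limit, so treat this as a coarse early warning rather than a guarantee.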

All the source is available at https://github.com/adcreare/nodejs-demos/tree/master/openpgp.js

AWS CodePipeline - Cross account deployments with CloudFormation

Posted at: 2018-01-28 @ 13:55:00

I use CodePipeline with CodeBuild a good amount. It is a good "enough" tool and I don't have to run a Jenkins or Bamboo server, so that makes me happy. Recently I set out to build a cross account pipeline, where code would get built in one account and then deployed to my development, staging and production accounts using CloudFormation.

This was where the trouble started. It is actually not that straightforward. AWS have documentation on the subject, which is a bit shit frankly.

Then the error messages you receive from CodePipeline when this doesn't work really give zero guidance.

Eventually I managed to get it working and figured I'd write some documentation for myself and so that others attempting this might do it faster and with less frustration than I had.

You can find it over at my GitHub: https://github.com/adcreare/cloudformation/tree/master/code-pipeline-cross-account

CodePipeline doesn't support cross account deployments via the console, so we are left with either CLI commands or CloudFormation. I went with CloudFormation yml files, and this repo contains sample templates you can use as a starting point for your pipeline.

I also put together a diagram to show how all the pieces connect together, which is the hardest part!! Obviously this can be expanded to any number of accounts by duplicating the configuration for additional accounts.

Good luck! Once it is working, it's great and works as advertised.

Traildash2 - visualize your AWS cloudtrail logs

Posted at: 2017-07-27 @ 06:49:07

I've been a huge fan of CloudTrail since it was launched. It provides one of the best tools available to see what is happening in your environment. Unfortunately AWS by default doesn't provide a dashboard or a way to actually process these logs. The viewer they give you is almost useless.

Hence creating Traildash2, a serverless application that takes your CloudTrail logs, pushes them into AWS Elasticsearch and provides a nice dashboard to show off all your handiwork!

The default TrailDash2 dashboard

The source code, CloudFormation templates for deployment, documentation and deployment steps can all be found over at the GitHub site https://github.com/adcreare/traildash2

The basics of the application are fairly simple. Attach a Lambda trigger to the S3 bucket which contains the CloudTrail files. Every time a new file arrives, the Lambda will trigger. Each file will be retrieved from S3 and pushed into the Elasticsearch cluster. The custom dashboard then allows that data to be displayed and searched in a more useful way.

TrailDash2 comes from a tool called TrailDash developed by the team from AppliedTrust https://github.com/AppliedTrust/traildash . Unfortunately they have ended the life of their tool, and creating your own dashboard from scratch isn't that straightforward.
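To make the "file in, documents out" step concrete, here is a rough sketch of turning one CloudTrail log file into an Elasticsearch bulk request body. It is not the Traildash2 source: the function name and the `cloudtrail` index name are hypothetical, and the S3 download and HTTP call are left out. It relies on CloudTrail log files being gzipped JSON with a top-level `Records` array, and on the bulk API's newline-delimited action/document format.

```typescript
import { gunzipSync, gzipSync } from "zlib";

interface CloudTrailFile {
  Records: Array<Record<string, unknown>>;
}

// Hypothetical helper: gzipped CloudTrail file in, bulk API body (NDJSON) out.
function buildBulkBody(gzippedLogFile: Uint8Array, indexName: string): string {
  const parsed = JSON.parse(gunzipSync(gzippedLogFile).toString("utf8")) as CloudTrailFile;
  const lines: string[] = [];
  for (const record of parsed.Records) {
    lines.push(JSON.stringify({ index: { _index: indexName } })); // action line
    lines.push(JSON.stringify(record));                           // document line
  }
  // The bulk API expects every line, including the last, to end with a newline.
  return lines.length > 0 ? lines.join("\n") + "\n" : "";
}

// Usage with a tiny made-up file standing in for the object fetched from S3.
const sampleFile = gzipSync(
  JSON.stringify({ Records: [{ eventName: "ConsoleLogin" }, { eventName: "CreateBucket" }] })
);
const bulkBody = buildBulkBody(sampleFile, "cloudtrail");
```

Depending on the Elasticsearch version, the action line may also need a `_type`, so check that against the cluster you deploy.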

Longer term I have plans for the lambda function to support alerting on certain event types, probably into an SNS topic. This would provide the administrator a bunch of alarms for certain kinds of events.

It's open source, so feel free to modify and extend the application. I will happily accept pull requests as well.

Serverless conference 2017 - Austin!

Posted at: 2017-05-02 @ 13:41:41

Recently I attended the 4th annual Serverless conference. This year it was held in Austin Texas in the US and now that I'm based in the US it was easy to come up with the justification to go!

The conference itself was a two-day event, fantastically well organised with great food and around 400 serverless nerds. The talented team from A Cloud Guru, who first got this serverless conference ball rolling 4 years ago, did an amazing job in Austin. They even had custom made DONUTS for morning tea!!

If you haven't checked out the A Cloud Guru guys (https://acloud.guru), you should. They offer great training for all the AWS certifications among other courses.

There are a few main observations I have from presentations and talking to various attendees.

There are a lot of people who seem to be under the impression that serverless will solve all their problems and is a magic bullet. Those of us who have actually built things at scale on serverless will all tell you it is an incredibly powerful tool, but there are still some sharp edges and it's not the right tool for everything.

Serverless won't fix poor development practices, yup sorry! It might hide them for a while, but sooner or later it's going to kick you if you build things badly.

There are a LOT of "admin" tools for serverless. At least 5-10 different frameworks for deployment. I guess that happens in the early days of a new platform, everyone wants to build their own tools to manage it. I expect in a few years we'll see that balance out to 2 or 3.

If you go serverless you are going to end up building a distributed system of small services. Troubleshooting distributed systems requires careful thought, development of failure/retry queues and careful logging/monitoring, but on the plus side, you do benefit from a microservice architecture. Serverless or FaaS is bringing in some people who might not have been exposed to distributed systems before and wouldn't otherwise have gone and built a microservices architecture. I suspect that's why there is some pain being felt. From a poll taken, the number 1 issue was around monitoring, so I'm sure AWS and others will be doing their best to fix that. (AWS has released X-Ray for Lambda, which is aimed at helping with that.)

Serverless is clearly building a BIG following and it was clear from the community that I'm not the only one who absolutely loves the serverless technology stack, which is super exciting. The companies attending the conference also did their part well. I didn't see much marketing fluff. To collect the conference t-shirt you also had to collect 10 pins, one from each vendor. Good plan! It forced some mingling and I had some of the best and most useful conversations I've had with vendors in a long time.

Presentations that made an impact on me and are worth watching (when the recordings are available, I will add a link at the top of this post):

Ryan Scott Brown @ryan_sb gave an EXCELLENT trenches talk that anyone building serverless apps should read! All his points are spot on. It helped me consolidate some thinking I've had. I won't comment more except point you to his slides and hopefully a video soon. https://serverlesscode.com/slides/serverlessconf-harmonizing-serverless-traditional-apps.pdf

Mike Roberts @mikebroberts gave a great presentation. He talked about moving from thinking of software development as a "Feature Factory" to thinking of it as a "Product Lab". You have to try lots of stuff, most of it will suck and that's OK, dump it and move on. Obviously tools like serverless can provide a path to do this and move quickly. This is similar to the idea of "Developer Anarchy" or "Extreme Agile" that Fred George has spoken of, and personally I'm completely on board. I see too many teams these days that go through a sprint creating crap that doesn't actually move the company or their user base forward, just because it is "safe". It never felt right and I'm glad to hear someone talking about it.

Mike has a bunch of links available at: http://bit.ly/symphonia-servlerlessconf-austin and slides http://bit.ly/symphonia-serverlessconf-austin-slides

Randall Hunt @jrhunt from AWS did an epic presentation and wins hands down in the demo and entertainment category. His machine acted up at the start and forced a reboot on him, but he took it all in his stride. If you don't know him, he does the Twitch live coding sessions on the AWS channel. This presentation showed me what someone who knows what they are doing can do in a fairly short time. I wish AWS got their people to do more sessions like this. They have some real talent: show it off and give the audience something to look up to, especially the younger crowds and those studying to move into a development field. Two big thumbs up!

I have to mention the Azure Functions/Logic Apps presentations. Chris Anderson really showed off their technology and how they are now a serious player in the serverless market. The dev tool integration and the ability to locally attach to and debug serverless function code via VS Code was impressive. Oddly enough I made one tweet: "Watch out lambda. Azure team is showing some very impressive things with their AzureFunctions #Serverlessconf" and promptly had a bucket load of likes, all from MS people. You can tell they are really trying hard to catch up to AWS. Based on Twitter, maybe too hard.

Charity Majors gave a funny and maybe slightly awkward presentation. She compared the current serverless situation to a small toddler: we love them deeply, but gee it will be good when they've grown up a little. She challenged some ideas and asked how much we trust our vendors, even our cloud providers. She finished it all off with a reminder that DevOps is about Ops learning dev... Oh, developers, that means you need to learn ops too, you know, and yes, you need ops even when it is serverless. Sorry.

Last but certainly not least, Sam Kroonenburg presented the A Cloud Guru serverless story: what worked, what didn't and the rough edges they found! Conferences need more of this: real world, from the trenches presentations that don't skip over the rough edges all projects have. It was very enjoyable to hear his and A Cloud Guru's journey.

----

To wrap up, it was a great event. Even presentations that didn't make my list were worth the trip over to Austin.

It was my first time in Austin, Texas, and the conference gave me the "excuse" to stay the weekend! Great city! I could live there, easy!!

Dreamflare - a tool to sync DNS records from DreamHost to CloudFlare

Posted at: 2017-02-21 @ 03:54:13

Today I'm releasing another bit of software! This one is titled Dreamflare and the git project can be found at https://github.com/adcreare/dreamflare

It is a Ruby based tool to replicate and keep in sync DNS records from DreamHost to CloudFlare.

In 2012 DreamHost announced that they were going to partner with CloudFlare and offer the CloudFlare DNS services at reduced cost, all configurable via the DreamHost web panel.

If you enable CloudFlare protection via the DreamHost control panel you will be getting the service cheaper. However, you will also be signing yourself up for a bad configuration that allows an attacker to completely bypass CloudFlare's WAF (web application firewall) and DDoS mitigation capabilities.

The diagram below shows the issue:

The best solution is to purchase your own CloudFlare service and not use the one through the DreamHost portal. When you do this, you will have to replicate your DNS records over from DreamHost to CloudFlare.

This, in turn, presents another issue. DreamHost expect to manage your DNS, so they make changes to your DNS records when they move your account to a new server or update their IP ranges. With your DNS over at CloudFlare, these updates won't reach the public internet and your site will suffer an outage as a result.

The solution to this is to query the DreamHost API and ensure that all DNS records returned exist and match in CloudFlare. This is what Dreamflare was designed to do.

Dreamflare is designed to be run at a regular interval (every few minutes). It will download your current DNS configuration from DreamHost and match it to the configuration in CloudFlare. If records are missing, it will create them. If records have incorrect values, it will update them.

For records that have multiple values (like MX records) it will ensure all the records match and remove any that do not.

In addition, it will allow any single A or CNAME records created manually in CloudFlare to remain, as long as they do not conflict with a record in DreamHost. This allows additional records to be created in CloudFlare for other purposes.
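The matching rules above can be sketched as a small planning function. This is an illustration only, written in TypeScript rather than Dreamflare's Ruby, and it is not taken from the Dreamflare source. The type and function names are hypothetical, an "update" is modelled as a remove plus a create, and "conflict" is simplified to mean the same name and record type.

```typescript
interface DnsRecord {
  name: string;
  type: string;
  value: string;
}

interface SyncPlan {
  create: DnsRecord[]; // records to add in the target (CloudFlare)
  remove: DnsRecord[]; // records to delete from the target
}

// Hypothetical planner: `source` is the DreamHost record set, `target` the CloudFlare one.
function planSync(source: DnsRecord[], target: DnsRecord[]): SyncPlan {
  const groupKey = (r: DnsRecord): string => `${r.name}|${r.type}`;
  const exactKey = (r: DnsRecord): string => `${groupKey(r)}|${r.value}`;

  const sourceGroups = new Set(source.map(groupKey));
  const sourceExact = new Set(source.map(exactKey));
  const targetExact = new Set(target.map(exactKey));

  // Missing or wrong-valued records get created with the source value.
  const create = source.filter((r) => !targetExact.has(exactKey(r)));
  // Target records are removed only when the source manages that name and type
  // but does not list this value. Anything else was added manually and is kept.
  const remove = target.filter(
    (r) => sourceGroups.has(groupKey(r)) && !sourceExact.has(exactKey(r))
  );
  return { create, remove };
}

// Usage with made-up records.
const dreamhostRecords: DnsRecord[] = [
  { name: "example.com", type: "A", value: "1.1.1.1" },
  { name: "example.com", type: "MX", value: "mx1.example.com" },
  { name: "example.com", type: "MX", value: "mx2.example.com" },
];
const cloudflareRecords: DnsRecord[] = [
  { name: "example.com", type: "A", value: "9.9.9.9" },
  { name: "example.com", type: "MX", value: "mx1.example.com" },
  { name: "example.com", type: "MX", value: "old.example.com" },
  { name: "blog.example.com", type: "CNAME", value: "pages.example.net" },
];
const plan = planSync(dreamhostRecords, cloudflareRecords);
```

The sketch compares names and values as exact strings. A real tool would also need to normalise case and trailing dots before comparing.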

The download and install instructions can be found over on the github page https://github.com/adcreare/dreamflare

Give it a try! I'd love to know if this helps someone else out there.

I've also designed the software to be somewhat modular, so in theory it should be easy to add additional hosting providers who also suffer from this issue, assuming they have a REST API we can query.